What Is NLP (Natural Language Processing)?

Natural Language Processing, or NLP, is a subset of artificial intelligence (AI) that focuses on the interaction between computers and humans through natural language. The objective of NLP is to read, decipher, understand, and make sense of the human language in a valuable way.

NLP is a multidisciplinary domain involving computer science, AI, and computational linguistics. It is used to understand the complexities of human language, such as understanding context, language structure, and meaning. NLP algorithms are designed to recognize patterns in data and turn this unstructured data format into a form that computers can understand and respond to.

There are many practical applications of NLP, from speech recognition and machine translation to sentiment analysis and entity extraction. NLP is behind many of the technologies that we use on a daily basis.

What are Large Language Models (LLM)?

Large Language Models, or LLMs, are machine learning models used to understand and generate human-like text. They are designed to predict the likelihood of a word or sentence based on the words that come before it, allowing them to generate coherent and contextually relevant text.

LLMs are an evolution of earlier NLP models. They have been made possible by the advancements in computing power, data availability, and machine learning techniques. These models are fed large amounts of text data, typically from the internet, which they use to learn patterns of language, grammar, facts about the world, and even achieve reasoning abilities.

The primary functionality of LLMs is their ability to respond to nuanced instructions and generate text that can be indistinguishable from text written by a human. This has led to their use in a wide variety of applications, most prominently in a new generation of AI chatbots that are revolutionizing human-machine interaction. Other applications of LLMs include text summarization, translation, writing original content, and automated customer service.

LLM vs. NLP: 6 Key Differences

Scope

NLP encompasses a broad range of models and techniques for processing human language, while Large Language Models (LLMs) represent a specific type of model within this domain. However, in practical terms, LLMs exhibit a similar scope to traditional NLP technology in terms of task versatility. LLMs have demonstrated the ability to handle almost any NLP task, from text classification to machine translation to sentiment analysis, thanks to their extensive training on diverse datasets and their advanced understanding of language patterns.

The adaptability of LLMs stems from their design, which allows them to understand and generate human-like text, making them suitable for a variety of applications that traditionally relied on specialized NLP models. For example, while NLP uses different models for tasks like entity recognition and summarization, an LLM can perform all these tasks with a single underlying model. However, it’s important to note that while LLMs are versatile, they are not always the most efficient or effective choice for every NLP task, especially when specific, narrowly-focused solutions are required.

Techniques

NLP uses a wide variety of techniques, ranging from rule-based methods to machine learning and deep learning approaches. These techniques are applied to various tasks, such as part-of-speech tagging, named entity recognition, and semantic role labeling, among others.

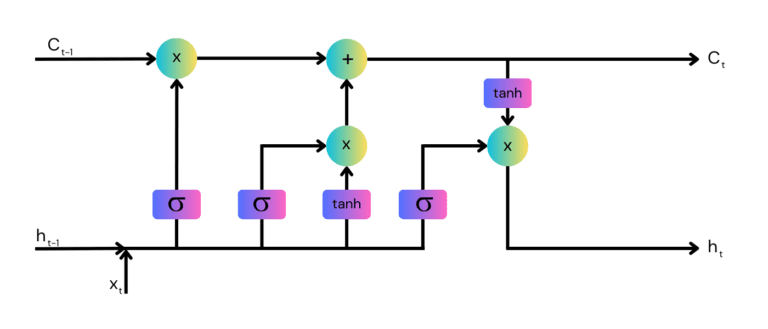

LLMs, on the other hand, primarily use deep learning to learn patterns in text data and predict sequences of text. They are based on a neural network architecture known as Transformer, which uses self-attention mechanisms to weigh the importance of different words in a sentence. This allows them to better understand context and generate relevant text.

Performance on Language Tasks

LLMs have been able to achieve remarkable results, often outperforming other types of models on a variety of NLP tasks. They can generate human-like text that is contextually relevant, coherent, and creative. This has led to their use in a wide range of applications, from chatbots and virtual assistants to content creation and language translation.

However, LLMs are not without their limitations. They require massive amounts of data and immense computing power to train. They can also be prone to generating inaccurate, unsafe, or biased content, as they learn from the data they are fed. Without specific guidance, these models lack an understanding of the broader context or moral implications.

In contrast, NLP encompasses a wider range of techniques and models, some of which may be more appropriate for certain tasks or applications. In many cases, traditional NLP models can solve natural language problems more accurately and with lower computational resources than LLMs.

Resource Requirements

LLMs need a significant amount of data and computational resources to function effectively. This is primarily because LLMs are designed to learn and infer the logic behind the data, which can be a complex and resource-intensive task. LLMs not only train on massive datasets but also have a very large number of parameters, in the billions or hundreds of billions for state of the art models. As of the time of this writing, training a new LLM is highly expensive and outside the reach of most organizations.

Most NLP models are able to train on smaller datasets relevant to their specific problem area. In addition, there are many NLP models available that are pre-trained on large text datasets, and researchers developing new models can leverage their experience using transfer learning techniques. In terms of computational resources, simple NLP models such as topic modeling or entity extraction require a tiny fraction of the resources needed to train and run LLMs. Complex models based on neural networks require more computational resources, but in general, compared to LLMs, they are much cheaper and easier to train.

Adaptability

LLMs are highly adaptable because they are designed to learn the logic behind the data, making them capable of generalizing and adapting to new situations or data sets. This adaptability is a powerful feature of LLMs as it allows them to make accurate predictions even when faced with data they haven’t seen before.

Traditional NLP algorithms are typically less flexible. While NLP models can be trained to understand and process a wide range of languages and dialects, they can struggle when faced with new tasks or problems, or even language nuances or cultural references that they haven’t specifically been trained on.

Ethical and Legal Considerations

Ethical and legal considerations play a crucial role in the use of both LLM and NLP. For LLMs, these considerations often revolve around the use of data. Since LLMs require a significant amount of structured data, there are serious privacy and data security concerns. It’s important for organizations training or using LLMs to have strict data governance policies in place and to comply with relevant data protection laws.

Another primary concern is about the safety of AI systems based on LLMs. The exponential improvement in performance and capabilities of LLM models, and the stated goal of many in the industry to improve their abilities until they achieve artificial general intelligence (AGI), raises major societal and even existential concerns for humanity. Many experts are concerned that LLMs could be used by bad actors to conduct cybercrime, disrupt democratic processes, and even cause AI systems themselves to act against the interest of humanity.

In the case of NLP, ethical and legal considerations are simpler, but still significant. Since NLP is often used to process and analyze human language, issues such as consent, privacy, and bias can arise. For example, if NLP is used to analyze social media posts, there could be issues related to consent and privacy. Additionally, if the training data used for NLP contains biases, these biases could be replicated in the NLP’s outputs.

Learn more in our detailed guide to large language model architecture (coming soon)

LLM and NLP: Using Both for Optimal Results

While there are distinct differences between LLM and NLP, the two can be used together for optimal results. For example, NLP can be used to pre-process and perform simple inferences on text data while LLMs can be used for higher-level cognitive tasks. By using both technologies, organizations can gain a deeper understanding of their data and make more informed decisions.

A good example of the combined use of NLP and LLM technologies is the Google search engine. This is a massively complex system that regularly parses and analyzes the content of the entire internet. Many aspects of Google search, such as the crawling and indexing process, the knowledge graph, and analysis of links and social signals, are based on traditional NLP techniques. In addition, Google uses Bidirectional Encoder Representations from Transformers (BERT), an early LLM, to process every word in a search query in relation to other words in a sentence. This gives Google a much deeper understanding of a user’s search queries.

Testing and Evaluating LLM and NLP Models with Kolena

We built Kolena to make robust and systematic ML testing easy and accessible for all organizations. With Kolena, machine learning engineers and data scientists can uncover hidden behaviors of LLM and NLP models, easily identify gaps in the test data coverage, and truly learn where and why a model is underperforming, all in minutes not weeks. Kolena’s AI / ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to instantly stitch together razor-sharp test cases from their data sets, enabling them to scrutinize AI/ML models in the precise scenarios those models will be unleashed upon the real world. Kolena platform transforms the current nature of AI development from experimental into an engineering discipline that can be trusted and automated.

Among its many capabilities, Kolena also helps with feature importance evaluation, and allows auto-tagging features. It can also display the distribution of various features in your datasets.

Reach out to us to learn how the Kolena platform can help build a culture of AI quality for your team.