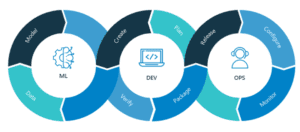

What Is an MLOps Pipeline?

An MLOps pipeline refers to the set of practices, tools, and workflows designed to streamline the development, deployment, and maintenance of machine learning models in production. It bridges the gap between machine learning model development and its operationalization, ensuring models are scalable, maintainable, and deliver value.

MLOps pipelines aim to automate the machine learning lifecycle, integrating with existing CI/CD frameworks to enable continuous delivery of ML-driven applications. They ensure consistency in model performance, allowing for seamless model updates, versioning, and thorough monitoring of models in production.

3 Stages of the MLOps Process

1. Design

In the design stage of an MLOps process, the primary focus is on defining the problem, setting objectives, and planning the overall strategy for the machine learning project. This involves identifying the business goals, understanding the data sources available, and determining the key performance indicators (KPIs) that will measure the success of the project.

This stage includes a thorough assessment of the technical requirements and the infrastructure needed to support the machine learning lifecycle. Decisions regarding the computational resources, data storage, and access permissions are made, keeping scalability and security in mind.

2. Model Development

The model development stage is where data scientists and machine learning engineers collaborate to create ML models that address the identified business problem. This phase begins with exploratory data analysis (EDA) to understand the characteristics and the distribution of the data. Insights gained during EDA guide the selection of modeling techniques and algorithms suitable for the task at hand.

Once an initial model is built, the process of model training and tuning begins. This involves feeding the model with training data, adjusting its parameters, and using validation techniques to measure its performance. The goal is to develop a model that not only performs well on the training data but can also generalize to new, unseen data. This iterative process requires a balance between model complexity and generalizability to avoid overfitting or underfitting.
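The gap between training and validation performance can be illustrated with a small, dependency-free sketch. Everything here is an assumption made for illustration: the toy data generator, the 75/25 split, and the use of a k-nearest-neighbours classifier stand in for whatever data and model a real project would use.

```python
import random

def make_noisy_data(n, noise=0.2, seed=0):
    """Hypothetical toy data: label is 1 when x > 0.5, flipped with probability `noise`."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.random()
        label = 1 if x > 0.5 else 0
        if rng.random() < noise:
            label = 1 - label
        rows.append((x, label))
    return rows

def split(rows, validation_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a validation set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - validation_fraction))
    return shuffled[:cut], shuffled[cut:]

def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbours = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return 1 if 2 * votes > k else 0

def accuracy(train, rows, k):
    return sum(knn_predict(train, x, k) == y for x, y in rows) / len(rows)

rows = make_noisy_data(200)
train, validation = split(rows)
for k in (1, 15):
    # Compare accuracy on the data the model has seen with accuracy on held-out data.
    print(k, accuracy(train, train, k), accuracy(train, validation, k))
```

With k=1 the model memorizes the training set, so its training accuracy is perfect even though a fifth of the labels are noise. The validation score is the more honest estimate, and a larger k typically narrows the gap between the two.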

3. Operations

The operations stage focuses on deploying models to production and keeping them running efficiently in a real-world environment. This involves setting up the infrastructure for model serving, such as deploying models as RESTful APIs or integrating them into existing applications. Deployment is typically automated and monitored so that new model versions can be rolled out without disrupting the services that depend on them.

Post-deployment, continuous monitoring and management of the model are essential. This includes tracking performance metrics, detecting model drift, and implementing strategies for model retraining and updating when necessary. The operations phase is an ongoing process that requires collaboration between data scientists, DevOps, and IT professionals.

MLOps Pipeline Architecture and Components

Data Collection and Ingestion

The first step in the MLOps pipeline focuses on gathering raw data from various sources and preparing it for further analysis. This step ensures the availability of quality data, which is critical for building effective machine learning models.

Automating data ingestion helps in handling large volumes of data and enables real-time processing. It involves validating, cleaning, and sometimes anonymizing data before it enters the pipeline, ensuring data integrity and compliance with privacy regulations.
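As a rough sketch of what validation and anonymization at ingestion time might look like, the example below checks each record against a small schema and replaces a user identifier with a salted hash. The field names, the schema, and the salt handling are hypothetical. Note that salted hashing is pseudonymization rather than full anonymization, so it may not satisfy every privacy requirement on its own.

```python
import hashlib

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"user_id": str, "amount": float}

def validate(record):
    """Return a list of problems found in one record (empty if it is valid)."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for {field}")
    return errors

def pseudonymize(value, salt):
    """Replace an identifier with a salted SHA-256 digest."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()

def ingest(records, salt):
    """Split records into accepted (pseudonymized) and rejected (with reasons)."""
    accepted, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            clean = dict(record)
            clean["user_id"] = pseudonymize(record["user_id"], salt)
            accepted.append(clean)
    return accepted, rejected
```

Keeping rejected records together with their error messages, rather than silently dropping them, makes it easier to trace data quality problems back to their source.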

Data Preparation and Feature Engineering

Data preparation involves cleaning and transforming raw data into a format suitable for model training. It includes handling missing values, outlier detection, and normalization. Feature engineering involves creating new features from the existing data to improve model performance.

Both steps are crucial for building robust ML models as they directly impact the accuracy and efficiency of the models. Automating these processes within an MLOps pipeline helps in reducing human error and improves the reproducibility of experiments.
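Two of the preparation steps mentioned above, handling missing values and normalization, can be sketched in a few lines. Median imputation and min-max scaling are chosen here only as common examples; the right techniques depend on the data and the model.

```python
from statistics import median

def impute_median(values):
    """Replace None entries with the median of the known values."""
    known = [v for v in values if v is not None]
    fill = median(known)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

raw = [2.0, None, 6.0, 4.0]
prepared = min_max_scale(impute_median(raw))
```

Packaging such steps as plain functions is one way to make them repeatable: the same code can run during training and again at prediction time.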

Model Development and Training

Model development and training is the core part of the MLOps pipeline, where machine learning models are built and trained on prepared data. It involves selecting an appropriate algorithm, configuring it, and optimizing its parameters to achieve the best performance.

Automated training enables experimentation with different models and parameters systematically, facilitating the identification of the most efficient models. Version control systems are often integrated at this stage to manage changes and experiments.
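One simple form of systematic experimentation is an exhaustive grid search that records every run. In the sketch below, `toy_score` is a made-up stand-in for a real train-and-evaluate step, and the parameter names are illustrative only.

```python
from itertools import product

def grid_search(train_fn, grid):
    """Try every combination in `grid`, log each run, and return the best one."""
    names = sorted(grid)
    runs = []
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        runs.append({"params": params, "score": train_fn(**params)})
    best = max(runs, key=lambda run: run["score"])
    return best, runs

def toy_score(learning_rate, depth):
    """Hypothetical objective that peaks at learning_rate=0.1, depth=3."""
    return -((learning_rate - 0.1) ** 2) - (depth - 3) ** 2

best, runs = grid_search(
    toy_score,
    {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 3, 4]},
)
```

Returning the full list of runs alongside the winner keeps a record of what was tried, which is the kind of information an experiment tracker would store.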

Model Evaluation and Validation

After training, models undergo rigorous evaluation and validation to ensure they meet predefined performance criteria. This includes analyzing the models against test datasets, evaluating metrics like accuracy, precision, and recall, and validating the model’s ability to generalize to new data.

Model evaluation and validation are crucial for preventing overfitting and ensuring the model performs well in real-world scenarios. Automated pipelines facilitate continuous testing and evaluation, allowing for iterative improvement of models.
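For a binary classifier, the metrics named above can be computed directly from the four confusion-matrix counts. This is a minimal sketch that assumes labels are encoded as 0 and 1. It returns 0.0 for precision or recall when the denominator is zero, which is one convention among several.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

metrics = classification_metrics(
    y_true=[1, 1, 1, 0, 0, 0, 0, 1],
    y_pred=[1, 1, 0, 0, 0, 1, 0, 1],
)
```

The scores are only meaningful as a test of generalization if `y_true` and `y_pred` come from data the model did not see during training.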

Model Deployment

Model deployment involves moving the trained model into a live environment where it can make predictions or take actions based on new data. This can range from embedding the model into existing applications to deploying it as a standalone service.

Automated deployment within an MLOps pipeline ensures models are safely and efficiently transitioned into production, with mechanisms for rollback, should the need arise. It also ensures that model serving environments are consistent and scalable.
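The rollback mechanism can be pictured with a small in-memory registry. The `ModelRegistry` class and its `health_check` callback are hypothetical names used for illustration. A production setup would rely on a model registry or deployment tool rather than a Python list.

```python
class ModelRegistry:
    """Tracks which model version is live and supports rollback."""

    def __init__(self):
        self._history = []  # versions that reached production, oldest first

    @property
    def live(self):
        return self._history[-1] if self._history else None

    def promote(self, version, health_check):
        """Make `version` live; undo the change if the health check fails."""
        self._history.append(version)
        if health_check(version):
            return True
        self._history.pop()  # automatic rollback to the previous version
        return False

    def rollback(self):
        """Manually return to the previous version, e.g. after an incident."""
        if len(self._history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self._history.pop()
        return self.live
```

A typical flow would call `promote("v2", health_check=smoke_test)`, where `smoke_test` is whatever check the team trusts, and fall back to `rollback()` if problems only surface later.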

Monitoring and Logging

Monitoring and logging entail keeping track of deployed models’ performance over time, identifying issues like drift or degradation early. This step includes setting up alerts for performance dips and logging incidents for further analysis.

Automated monitoring and logging provide critical insights into model health and behavior in production, enabling timely interventions to maintain or improve model performance.
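One widely used way to quantify drift in a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution of production data against a training-time baseline. The sketch below is one possible implementation. The bin count, the epsilon used for empty bins, and the 0.25 alert threshold (a common rule of thumb, not a standard) are all assumptions.

```python
import math

def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    low, high = min(baseline), max(baseline)
    width = (high - low) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            index = int((v - low) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # Floor each share at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

def drift_alert(baseline, current, threshold=0.25):
    """Return True when the PSI exceeds the (assumed) alert threshold."""
    return psi(baseline, current) > threshold

baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
```

Here `drift_alert(baseline, shifted)` fires because half of the shifted values fall outside the baseline range, while comparing the baseline with itself yields a PSI of zero.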


Challenges of Building ML Pipelines

Building machine learning pipelines presents a unique set of challenges that can affect their efficiency, scalability, and overall success:

- Data quality and consistency: Ensuring high-quality, consistent data across the pipeline is a significant challenge. Inconsistencies, missing values, and noisy data can severely impact model performance. Establishing processes for ongoing data cleaning and validation is essential.

- Scalability: As models and data volumes grow, maintaining performance and managing resources becomes increasingly difficult. Designing pipelines that can scale efficiently with demand without compromising performance is a critical challenge.

- Integration with existing systems: Integrating ML pipelines into existing IT and software ecosystems can be complex, especially in organizations with legacy systems. Ensuring compatibility and seamless operation across different environments requires careful planning and execution.

- Talent and collaboration: Building and maintaining ML pipelines requires a multidisciplinary team of data scientists, engineers, and IT professionals. Fostering collaboration and ensuring everyone has the necessary skills can be a challenge.

- Cost management: Machine learning operations can become costly, especially with the use of cloud resources, storage, and computational power. Optimizing costs without compromising the quality and speed of development is a challenge.

Considerations and Tips for Building an MLOps Pipeline

Here are a few tips that will help you build an effective MLOps pipeline.

1. Start Small and Iterate

Building a successful MLOps pipeline is a gradual process. Start with a small, manageable project to establish a foundation. Early wins provide valuable insights, helping refine strategies before scaling up.

Iterating on the initial setup allows for continuous improvements. Feedback loops should be established to incorporate learnings and adapt the pipeline for better efficiency and effectiveness over time.

2. Leverage Existing Tools and Platforms

Utilizing established tools and platforms can significantly streamline the creation and management of an MLOps pipeline.

Many cloud providers, software vendors, and open source projects offer solutions designed specifically for machine learning workflows, including data preprocessing, model training, deployment, and monitoring. Leveraging these tools accelerates pipeline development and lets you build on practices that others have already tested, rather than reinventing them.

3. Establish Version Control

Implementing version control is crucial for managing models, data sets, and code. It ensures reproducibility of experiments and simplifies the process of rolling back to previous versions if issues arise. Version control facilitates collaboration among team members, making it easier to track changes, share work, and maintain consistency across development and production environments.
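Code is usually versioned with a tool such as Git, but data sets and parameters also need stable identifiers. One lightweight approach, sketched below, is to derive a content hash for each and store the hashes with every run. The manifest layout and function names are hypothetical, and this sketch assumes the data is small enough to serialize as JSON.

```python
import hashlib
import json

def fingerprint(obj):
    """Short, order-independent content hash of a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]

def run_manifest(code_version, dataset_rows, params):
    """Record exactly which code, data, and parameters produced a model."""
    return {
        "code_version": code_version,  # e.g. a Git commit hash
        "data_hash": fingerprint(dataset_rows),
        "params_hash": fingerprint(params),
    }

manifest = run_manifest(
    code_version="abc1234",  # placeholder value
    dataset_rows=[{"x": 1.0, "y": 0}, {"x": 2.0, "y": 1}],
    params={"learning_rate": 0.1, "depth": 3},
)
```

Two runs with identical manifests used the same inputs, so any difference in their results points to nondeterminism in training rather than to a change in data or configuration.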

4. Implement a CI/CD Pipeline

Integrating a Continuous Integration and Continuous Deployment (CI/CD) pipeline automates the process of testing, validating, and deploying models. It enhances the efficiency of delivering updates and new features, ensuring a faster turnaround.

CI/CD practices also support operational reliability by enabling thorough testing before deployment, reducing the risk of introducing errors into production systems.
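In a CI/CD pipeline, testing a model before deployment often takes the form of a quality gate: a script that compares the candidate's metrics with fixed minimums and with the model currently in production. The function below is a sketch of that idea. The metric names, the thresholds, and the allowed regression of 0.01 are illustrative assumptions.

```python
def quality_gate(candidate, production, minimums, max_regression=0.01):
    """Return a list of reasons to block deployment (empty means pass)."""
    failures = []
    # Absolute floors the candidate must meet regardless of history.
    for name, floor in minimums.items():
        if candidate[name] < floor:
            failures.append(f"{name} {candidate[name]:.3f} is below minimum {floor:.3f}")
    # The candidate must not be noticeably worse than the live model.
    for name, current in production.items():
        if candidate.get(name, 0.0) < current - max_regression:
            failures.append(f"{name} regressed from {current:.3f} to {candidate.get(name, 0.0):.3f}")
    return failures

failures = quality_gate(
    candidate={"accuracy": 0.92, "recall": 0.80},
    production={"accuracy": 0.91, "recall": 0.85},
    minimums={"accuracy": 0.90},
)
```

In this example the gate blocks the release because recall dropped, even though accuracy improved. A CI job would typically print the failures and exit with a non-zero status so that the deployment step never runs.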

5. Track Performance Metrics and Operational Metrics

Continuous tracking of both performance and operational metrics is essential for understanding and improving the behavior of ML models in production. Performance metrics focus on the accuracy and effectiveness of models, while operational metrics provide insights into system health and resource utilization.

Tracking these metrics enables proactive management of models and systems, and provides a continuous feedback loop for earlier stages of the pipeline.
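To make the distinction concrete, the sketch below summarizes a batch of request logs into two operational metrics (95th-percentile latency and error rate) and one performance metric (accuracy on the requests whose true label is already known). The log format and its keys are hypothetical.

```python
import math

def percentile(values, q):
    """Nearest-rank percentile for an integer q between 1 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(requests):
    """Summarize logs with hypothetical keys: latency_ms, ok, correct."""
    # `correct` is None until the true outcome for a prediction is known.
    labelled = [r for r in requests if r["correct"] is not None]
    return {
        "p95_latency_ms": percentile([r["latency_ms"] for r in requests], 95),
        "error_rate": sum(not r["ok"] for r in requests) / len(requests),
        "accuracy": sum(r["correct"] for r in labelled) / len(labelled) if labelled else None,
    }
```

Ground-truth labels often arrive long after a prediction is served, which is why accuracy here is computed on a subset while the operational metrics cover every request.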

6. Emphasize Security and Compliance

Ensuring security and compliance within an MLOps pipeline is paramount, especially in industries dealing with sensitive data. This includes implementing measures to protect data privacy, secure data access, and comply with relevant regulations such as GDPR or HIPAA. Encrypting data in transit and at rest, using secure authentication methods, and managing permissions meticulously are critical steps in safeguarding your ML operations.

Additionally, it’s important to incorporate security practices throughout the pipeline, from initial data collection to model deployment and monitoring. Regular security assessments and compliance audits can help identify vulnerabilities and ensure that the pipeline adheres to necessary legal and industry standards.

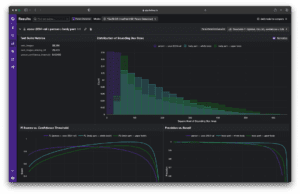

Building Your MLOps Pipeline with Kolena

Kolena offers an integrated MLOps platform designed to accelerate the deployment and management of machine learning models at scale. It simplifies complex ML workflows, from data prep to model production, with a focus on explainability, continuous testing, and monitoring for ML models.

Kolena integrates seamlessly with existing data sources and infrastructure, providing a unified environment for end-to-end machine learning operations. Kolena’s automated pipelines and pre-built templates help streamline and automate ML workflows.